“[Advanced Intro to AI Alignment] 2. What Values May an AI Learn? — 4 Key Problems” by Towards_Keeperhood

2.1 Summary

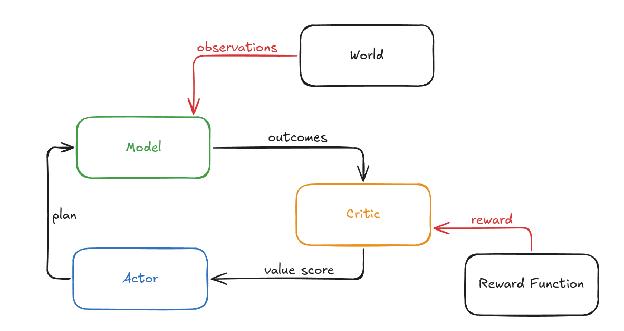

In the last post, I introduced model-based RL, which is the frame we will use to analyze the alignment problem, and we learned that the critic is trained to predict reward.

I already briefly mentioned that the alignment problem is centrally about making the critic assign high value to outcomes we like and low value to outcomes we don’t like. In this post, we’re going to try to get some intuition for what values a critic may learn, and thereby also learn about some key difficulties of the alignment problem.

Section-by-section summary:

- 2.2 The Distributional Leap: The distributional leap is the shift from the training domain to the dangerous domain (where the AI could take over). We cannot test safety in that domain, so we need to predict how values generalize.

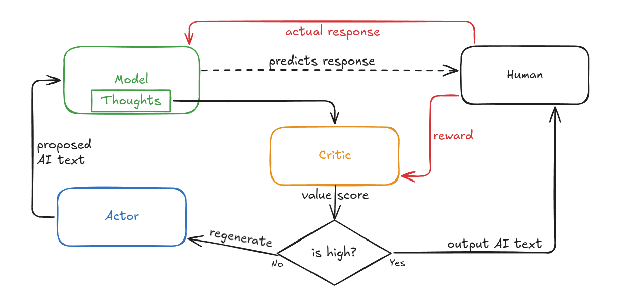

- 2.3 A Naive Training Strategy: We set up a toy example: a model-based RL chatbot trained on human feedback, where the critic learns to predict reward from the model's internal thoughts. This isn't meant as a good alignment strategy—it's a simplified setup for analysis.

- 2.4 What might the critic learn?: The critic learns aspects of the model's thoughts that correlate with reward. We analyze whether [...]
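To make the point in 2.3 and 2.4 concrete, here is a minimal sketch of a critic that learns whatever correlates with reward. It is not the author's setup: the two "thought features" (`thought_is_honest`, `rater_would_approve`), the training data, and the linear model are all hypothetical choices made for illustration. In this toy data, honesty usually coincides with approval, but reward tracks approval exactly, so the trained critic ends up putting its weight on approval rather than on honesty.

```python
# Toy critic: a linear model that predicts reward from two hypothetical
# binary "thought features". Feature names and data are illustrative
# assumptions, not taken from the original post.

# Each row: (thought_is_honest, rater_would_approve) -> reward from feedback.
TRAINING_DATA = [
    ((1.0, 1.0), 1.0),  # honest and welcome
    ((1.0, 1.0), 1.0),
    ((1.0, 1.0), 1.0),
    ((0.0, 0.0), 0.0),  # dishonest and unwelcome
    ((0.0, 0.0), 0.0),
    ((0.0, 1.0), 1.0),  # flattering but dishonest, still rewarded
    ((1.0, 0.0), 0.0),  # honest but unwelcome, not rewarded
]


def predict(weights, bias, features):
    """Critic's predicted reward for one feature vector."""
    return bias + sum(w * x for w, x in zip(weights, features))


def train_critic(data, steps=5000, lr=0.1):
    """Fit the linear critic by full-batch gradient descent on squared error."""
    weights, bias = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(steps):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for features, reward in data:
            error = predict(weights, bias, features) - reward
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


weights, bias = train_critic(TRAINING_DATA)

# Cases where honesty and approval come apart.
honest_but_unwelcome = predict(weights, bias, (1.0, 0.0))
flattering_but_dishonest = predict(weights, bias, (0.0, 1.0))

print("weight on honesty: ", round(weights[0], 3))
print("weight on approval:", round(weights[1], 3))
print("critic prefers flattery:", flattering_but_dishonest > honest_but_unwelcome)
```

The design is deliberately simple: with only two features and a handful of examples, it is easy to see that the critic's preference for honesty in typical training cases was a side effect of the correlation, which is the kind of question section 2.4.1 raises.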

---

Outline:

(00:16) 2.1. Summary

(03:48) 2.2. The Distributional Leap

(05:26) 2.3. A Naive Training Strategy

(07:01) 2.3.1. How this relates to current AIs

(08:26) 2.4. What might the critic learn?

(09:55) 2.4.1. Might the critic learn to score honesty highly?

(12:35) 2.4.1.1. Aside: Contrast to the human value of honesty

(13:05) 2.5. Niceness is not optimal

(14:59) 2.6. Niceness is not (uniquely) simple

(16:02) 2.6.1. Anthropomorphic Optimism

(19:26) 2.6.2. Intuitions from looking at humans may mislead you

(21:12) 2.7. Natural Abstractions or Alienness?

(21:35) 2.7.1. Natural Abstractions

(23:15) 2.7.2. ... or Alienness?

(25:54) 2.8. Value extrapolation

(27:49) 2.8.1. Coherent Extrapolated Volition

(32:48) 2.9. Conclusion

The original text contained 11 footnotes which were omitted from this narration.

---

First published:

January 2nd, 2026

---

Narrated by TYPE III AUDIO.
